Why use AMQP to make μservices communicate?

Many microservices architectures use HTTP as their communication protocol. It is mainstream, very well tooled, and virtually every language and platform provides everything needed to run such an architecture successfully.

Yet, there are many cases where HTTP is not ideal:

- it is a synchronous protocol, so asynchronous interactions are difficult to model

- whenever the service you call is down, the interaction results in an error (and requires a retry strategy)

- if you need to broadcast a piece of data to unknown receivers (a notification or an event), you have to add complex subscription management

- if you add a new use case based on an existing interaction, you have to change the message sender

At Lectra, we are developing a platform of services, in which any service may potentially interact with any other. Moreover, many of our APIs are asynchronous by nature: they receive commands and publish events. For these reasons, we chose RabbitMQ as the message broker for all our internal communications.

What does an AMQP API look like?

Unlike the HTTP world, the AMQP world does not seem to have standards such as OpenAPI, nor an architectural style like REST. If you know of any, please let us know!

So we had to make our own choices. AMQP is a programmable protocol (applications declare their own exchanges, queues and bindings), so we needed to standardize how a topology is built, what the message schemas are, and so on. That is not the purpose of this article, but here are some of our choices:

- all payloads are JSON text (even though AMQP itself is a binary protocol)

- an API can follow either an RPC (query-response) style or a command-event style

- routing keys follow a standard format

- some headers are standard and mandatory (such as the user who originated the request)
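Because these conventions are only listed here, not specified, the following is a minimal sketch under loudly stated assumptions of how a message could be checked against them in plain Java, without any AMQP client. The routing-key shape `<domain>.<command|event|query>.<action>` is a guess modelled on the `usage.command.create` key used in the test later in this article, and the `x-origin-user` header name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical checker for internal messaging conventions. The routing-key
// pattern and the header name are illustrative assumptions, not a real spec.
class MessageConventions {

    // Assumed shape: <domain>.<command|event|query>.<action>, e.g. "usage.command.create".
    static final Pattern ROUTING_KEY =
            Pattern.compile("[a-z][a-z0-9-]*\\.(command|event|query)\\.[a-z][a-z0-9-]*");

    // Hypothetical name for the header carrying the user at the origin of the request.
    static final List<String> MANDATORY_HEADERS = List.of("x-origin-user");

    // Returns every convention violation; an empty list means the message complies.
    static List<String> check(String routingKey, String contentType, Map<String, ?> headers) {
        List<String> violations = new ArrayList<>();
        if (!ROUTING_KEY.matcher(routingKey).matches()) {
            violations.add("bad routing key: " + routingKey);
        }
        // Payloads are JSON text, so the content type must say so.
        if (!"application/json".equals(contentType)) {
            violations.add("payload must be JSON, got: " + contentType);
        }
        for (String name : MANDATORY_HEADERS) {
            if (!headers.containsKey(name)) {
                violations.add("missing header: " + name);
            }
        }
        return violations;
    }

    public static void main(String[] args) {
        System.out.println(check("usage.command.create", "application/json",
                Map.of("x-origin-user", "alice")));
        System.out.println(check("UsageCreate", "text/plain", Map.of()));
    }
}
```

Returning a list of violations rather than failing on the first one makes it easier to report everything wrong with a message in a single test run.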

Based on that specification, we needed tools to test our APIs.

The tooling

Currently, there is no tool to help test AMQP APIs (again, if you know of one, please let us know!). Of course, we can write tests using our favourite language and test library, such as JUnit, but it is a highly repetitive task with a lot of boilerplate code. We could then factor out a common library, but our teams use different languages and platforms, such as Java, Node.js, .NET, and so on. Not everyone is fluent in all of them, and porting tools is a difficult and time-consuming task.

At first, each team developed its own tooling to test its APIs. One of the teams had the idea of using Cucumber to implement these API tests, as it was easier for their QA engineer to master.

Enter Kendo

Back in 2017, some of us discovered a very interesting tool for HTTP-based APIs: KarateDSL. It lets you write tests as feature files, in a syntax that is independent of any programming language and does not require deep knowledge of one. Moreover, it provides a DSL (Domain Specific Language) for HTTP interactions and runs the tests without any further effort:

```gherkin
Scenario Outline:
  # note the 'text' keyword instead of 'def'
  * text query =
    """
    {
      hero(name: "<name>") {
        height
        mass
      }
    }
    """
  Given path 'graphql'
  And request { query: '#(query)' }
  And header Accept = 'application/json'
  When method post
  Then status 200

  Examples:
    | name  |
    | John  |
    | Smith |
```

Inspired by this idea, we created Kendo. Kendo is written in Java, is based on Cucumber, and lets you write a complete test suite for an AMQP API without any coding. It was born from the refactoring of the test suite of the team that used Cucumber to test its APIs, and was eventually extracted into its own project. We can even run it with Docker, so no specific installation is needed (non-Java teams are not forced to install Java)!

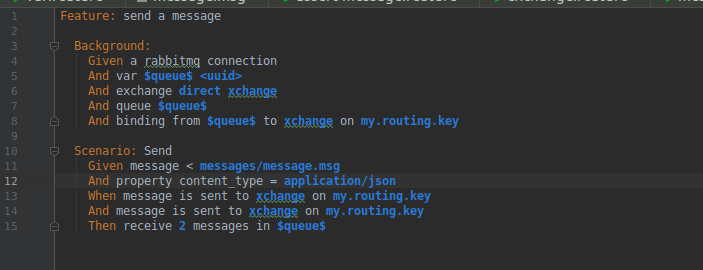

For example, here is one of our real tests (some names have been changed for confidentiality reasons):

```gherkin
Feature: compute and register rated usage for WonderApp

  Background:
    Given a rabbitmq connection
    And exchange usage
    And exchange usage.computed
    And var $tenant$ <uuid>
    And initial credit for $tenant$
    And var $queue$ <uuid>
    And queue $queue$
    And binding from $queue$ to usage.computed on unused

  Scenario Outline: Send a message with :
    - a successful usage
    - an interrupted order

    Given var $orderId$ <uuid>
    And message < messages/<message>
    And property content_type = application/json
    And property correlation_id = <corrid>
    When message is sent to usage on usage.command.create
    Then sleep for 2s
    And credit for $tenant$ has increased and equal to <creditpoint>
    And receive 1 message in $queue$
    And json $.payload.points == int <creditpoint>
    And property correlation_id == <corrid>

    Examples:
      | message                 | creditpoint | corrid          |
      | success.msg             | 12          | 12345-6789-0006 |
      | success-interrupted.msg | 18          | 12345-6789-0009 |
```
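In this test, Cucumber runs the Scenario Outline once per `Examples` row, replacing each `<column>` placeholder with that row's value. Placeholders with no matching column, such as `<uuid>`, are left untouched, which is presumably how Kendo gets to interpret `<uuid>` itself. The following plain-Java sketch mimics that substitution for illustration only; it is not Cucumber's code.

```java
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Toy illustration of Scenario Outline expansion (not Cucumber's implementation).
class OutlineExpansion {

    private static final Pattern PLACEHOLDER = Pattern.compile("<([^<>]+)>");

    // Replaces each <column> with the row value; placeholders with no matching
    // column are kept verbatim.
    static String expand(String step, Map<String, String> row) {
        Matcher m = PLACEHOLDER.matcher(step);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = row.getOrDefault(m.group(1), m.group(0));
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        List<Map<String, String>> examples = List.of(
                Map.of("message", "success.msg", "creditpoint", "12", "corrid", "12345-6789-0006"),
                Map.of("message", "success-interrupted.msg", "creditpoint", "18", "corrid", "12345-6789-0009"));
        for (Map<String, String> row : examples) {
            System.out.println(expand("And message < messages/<message>", row));
            System.out.println(expand("Given var $orderId$ <uuid>", row));
        }
    }
}
```

Note that the lone `<` in `message < messages/...` is not a placeholder, because no closing `>` follows it before the next `<`.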

This file is a Cucumber feature file (so your IDE can provide syntax highlighting!), and all the underlying code is packaged in Kendo, so you don't need to write any. Most interactions you can have with RabbitMQ are modelled, but if a feature is not yet available, or you need an unrelated feature (such as calling a REST API or setting up some files), you can simply import Kendo as a jar dependency in a Maven project and start writing your own Cucumber steps. Even better, you can package those steps as a module that other projects import as a jar dependency, et voilà!
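Writing a custom step boils down to binding a text pattern to a piece of code. The following sketch mimics that binding with plain `java.util.regex` so that it runs without Cucumber; it is a toy, not Cucumber's API. In a real Kendo extension you would instead write methods annotated with Cucumber's `@Given`/`@When`/`@Then` (package `io.cucumber.java.en`). The "a file ... containing ..." step is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Toy step registry: NOT Cucumber's API, only an illustration of how
// step text is matched against patterns and dispatched to code.
class StepRegistry {

    // One step definition: a pattern plus the code to run with its captured groups.
    private static final class Step {
        final Pattern pattern;
        final Consumer<List<String>> action;

        Step(Pattern pattern, Consumer<List<String>> action) {
            this.pattern = pattern;
            this.action = action;
        }
    }

    private final List<Step> steps = new ArrayList<>();

    void define(String regex, Consumer<List<String>> action) {
        steps.add(new Step(Pattern.compile(regex), action));
    }

    // Runs the first definition whose pattern matches the whole step text
    // (the Gherkin keyword such as "Given" is assumed to be stripped already).
    void run(String stepText) {
        for (Step step : steps) {
            Matcher m = step.pattern.matcher(stepText);
            if (m.matches()) {
                List<String> captured = new ArrayList<>();
                for (int i = 1; i <= m.groupCount(); i++) {
                    captured.add(m.group(i));
                }
                step.action.accept(captured);
                return;
            }
        }
        throw new IllegalArgumentException("undefined step: " + stepText);
    }

    public static void main(String[] args) {
        StepRegistry registry = new StepRegistry();
        // Hypothetical custom step, e.g. preparing a file before a scenario runs.
        registry.define("a file (\\S+) containing \"(.*)\"",
                a -> System.out.println("would write '" + a.get(1) + "' to " + a.get(0)));
        registry.run("a file data/input.txt containing \"hello\"");
    }
}
```

Failing loudly on an unmatched step mirrors what you want from a test runner: a typo in a feature file should never be silently skipped.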

How does this work? The test runner is given an environment variable for the RabbitMQ connection, then the test suite (all the features) is run using JUnit. For more details, see the Cucumber documentation, since Kendo is entirely based on it. Message payloads are loaded from template files, which keeps feature files short, and these templates support variable expansion. Finally, the assertions written in feature files are executed as AssertJ assertions by the Kendo runtime.
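The following is a minimal sketch of variable expansion in a payload template, assuming templates use the same `$name$` syntax as the feature file (for example `$tenant$`) and that `var $x$ <uuid>` binds a fresh random UUID. Both are assumptions for illustration; this is not Kendo's actual implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch of $name$ expansion in a message template (assumed syntax, not Kendo's code).
class TemplateVars {

    // A variable is an identifier wrapped in dollar signs, e.g. $tenant$.
    private static final Pattern VAR = Pattern.compile("\\$([A-Za-z][A-Za-z0-9_]*)\\$");

    private final Map<String, String> vars = new HashMap<>();

    // Mimics "var $name$ <uuid>": binds the variable to a fresh random UUID.
    String defineUuid(String name) {
        String value = UUID.randomUUID().toString();
        vars.put(name, value);
        return value;
    }

    void define(String name, String value) {
        vars.put(name, value);
    }

    // Replaces every $name$ with its value; fails fast on undefined variables.
    String expand(String template) {
        Matcher m = VAR.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = vars.get(m.group(1));
            if (value == null) {
                throw new IllegalStateException("undefined variable: " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        TemplateVars ctx = new TemplateVars();
        ctx.defineUuid("tenant");
        ctx.define("orderId", "order-42");
        String template = "{\"tenant\": \"$tenant$\", \"order\": \"$orderId$\"}";
        System.out.println(ctx.expand(template));
    }
}
```

Generating a fresh UUID per run for tenants and queues keeps test runs isolated from each other on a shared broker, which is likely why the feature file binds them that way.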

The future

Today, Kendo is widely used at Lectra, and will probably be adopted by all teams eventually. It is now considered stable enough, and we are thinking about open-sourcing it. When it becomes public, we will write a more complete post about it!